What is a reverse proxy and why should I use it?

First of all: why use a reverse proxy to serve the app when Kestrel is already built into .NET Core?

While Kestrel is not a bad choice when you’re developing the app, it’s not really suited for use in production environments, where multiple users will access the app.

That’s where the reverse proxy comes in. A reverse proxy is a server that accepts requests from clients and forwards them to one or more backend servers. This lets us set up load balancing when we have multiple backend servers, or run several APIs on the same server under different domains. That wouldn’t be possible with Kestrel alone, since a single Kestrel process would occupy port 80 and/or 443.

With this knowledge, we can now start configuring our server.

Prerequisites

To complete this tutorial you will need a server running Ubuntu 20.04 with an external IP address and, preferably, a domain that points to that IP.

NGINX installation

NGINX is an HTTP server that will work as a reverse proxy for our use case.

Installation is pretty straightforward. First, let’s update our package lists, so that we get the latest available versions.

    sudo apt-get update

Then just install the NGINX server with the command

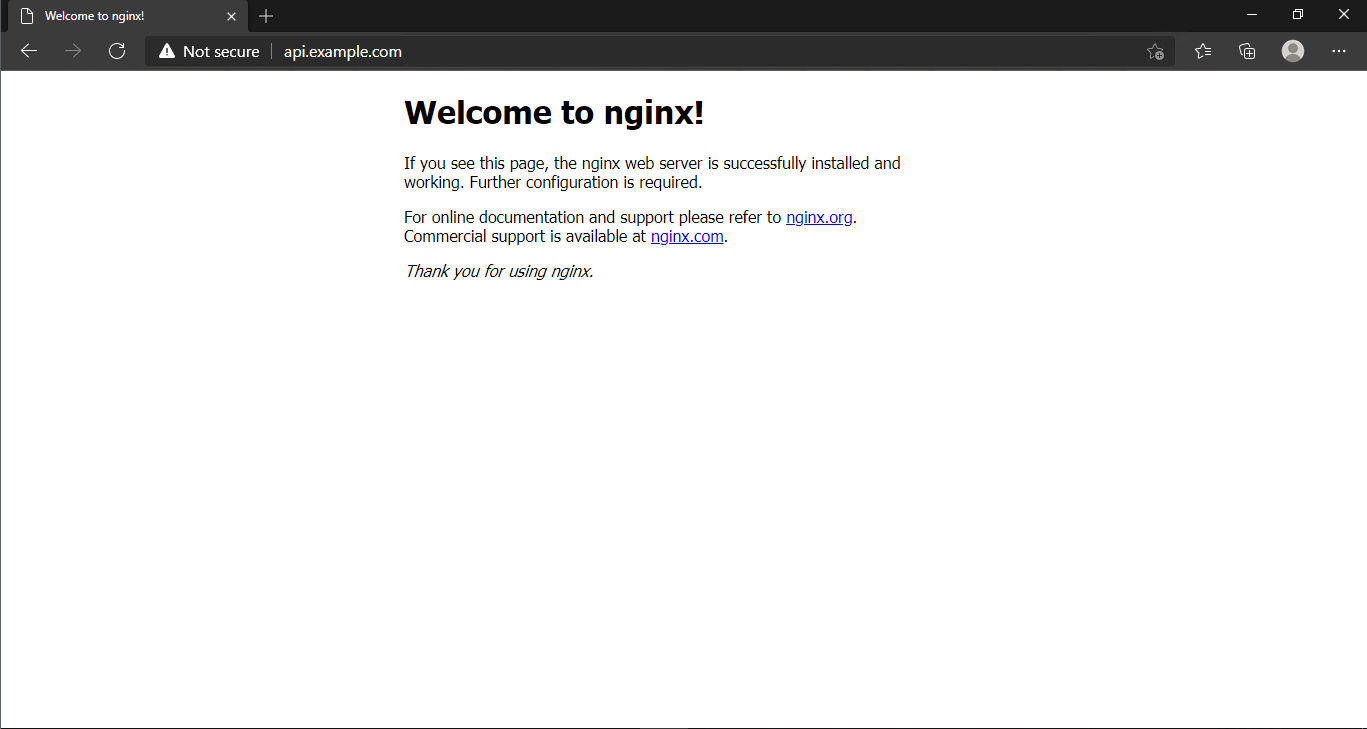

    sudo apt-get install nginx -y

Now let’s check if the server is working. Just go to your domain name in your browser and you should see the NGINX welcome page.

After this is done, we can move on to configuring NGINX to serve as a reverse proxy.

Configuring NGINX

Firstly, we need to create a .conf file for our domain.

Let’s create one by typing

    sudo nano /etc/nginx/sites-available/000-api.example.com.conf

It’s a good idea to number and name the files like this, so that later on it is easier to find the right file for the right domain name.

After that just copy and paste the code below into that file, changing the domain name to yours.

    # reverse proxy
    server {
        listen 80;
        listen [::]:80;
        server_name api.example.com;

        location / {
            proxy_pass http://localhost:5000;
            proxy_http_version 1.1;
            proxy_cache_bypass $http_upgrade;

            # Proxy headers
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection keep-alive;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto $scheme;
            proxy_set_header X-Forwarded-Host $host;
            proxy_set_header X-Forwarded-Port $server_port;
            proxy_hide_header X-Frame-Options;

            # Proxy timeouts
            proxy_connect_timeout 60s;
            proxy_send_timeout 60s;
            proxy_read_timeout 60s;
        }
    }

Now let’s explain what happens here. First we declare the port to listen on, which is 80 for plain HTTP traffic (on both IPv4 and IPv6), then we set server_name to your chosen domain name.

Next comes the location block, in which we declare where NGINX should forward requests, that is, where your backend server is. It doesn’t need to be localhost, but that’s what we’re using in this example.
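Since the backend does not have to be localhost, proxy_pass can also point at a named group of servers, which is how the load-balancing setup mentioned at the start is usually expressed in NGINX. The sketch below is only an illustration: render_upstream is a hypothetical helper, and the group name api_backend and the 10.0.0.x addresses are placeholders, not part of this tutorial’s setup. It prints an upstream block; with no further options NGINX spreads requests across the listed servers round-robin.

```shell
# Hypothetical helper: print an NGINX upstream block for a list of backends.
# Usage: render_upstream GROUP_NAME HOST:PORT [HOST:PORT...]
render_upstream() {
    name="$1"
    shift
    printf 'upstream %s {\n' "$name"
    # One "server" line per backend address passed on the command line.
    for backend in "$@"; do
        printf '    server %s;\n' "$backend"
    done
    printf '}\n'
}

# Example call (addresses are placeholders):
# render_upstream api_backend 10.0.0.11:5000 10.0.0.12:5000
```

The generated block would go at the top of the .conf file, outside the server block, and the location block would then use proxy_pass http://api_backend; instead of http://localhost:5000.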

Then we set some headers that tell our backend server about the original request, such as the client’s IP address and the protocol that was used.

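In the last section, we set up timeouts for the reverse proxy. They limit how long NGINX waits while connecting to the backend server, sending the request to it, and reading its response. If a timeout expires, NGINX returns a 504 (Gateway Timeout) error; if the backend cannot be reached at all, it returns a 502 (Bad Gateway) error.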
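When several APIs share one server, their server blocks typically differ only in the domain name and the backend port. As a rough sketch of that idea, the hypothetical helper below (render_proxy_conf is an invented name, and it emits a trimmed-down version of the configuration above, not the full set of headers and timeouts) prints a server block for a given domain and port.

```shell
# Hypothetical helper: print a minimal reverse-proxy server block.
# Usage: render_proxy_conf DOMAIN BACKEND_PORT
render_proxy_conf() {
    domain="$1"
    port="$2"
    # The heredoc is unquoted so ${domain} and ${port} expand; NGINX variables
    # such as $host are escaped with a backslash so they stay literal.
    cat <<EOF
server {
    listen 80;
    listen [::]:80;
    server_name ${domain};

    location / {
        proxy_pass http://localhost:${port};
        proxy_http_version 1.1;
        proxy_set_header Host \$host;
        proxy_set_header X-Forwarded-For \$proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto \$scheme;
    }
}
EOF
}

# Example call (domain, port and file name are placeholders):
# render_proxy_conf shop.example.com 5001 | sudo tee /etc/nginx/sites-available/001-shop.example.com.conf
```

Each API then gets its own file and its own port on localhost, while NGINX alone listens on port 80.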

After that we need to link the file from sites-available to sites-enabled to enable the site. We can use this command to do that:

    sudo ln -s /etc/nginx/sites-available/000-api.example.com.conf /etc/nginx/sites-enabled/000-api.example.com.conf

After creating a new configuration or modifying an existing one, it is good practice to run

    sudo nginx -t

This tests the current configuration for errors and, if there are any, shows where they are. This is useful when we already have some apps deployed and don’t want to interrupt them.
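If you enable sites often, the linking step can be wrapped in a small function. This is a sketch under assumptions: enable_site is a hypothetical name, and the optional second argument (the NGINX directory, defaulting to /etc/nginx) exists only so the function can be tried against a scratch directory first. Unlike a plain ln -s, it refuses to link a file that does not exist and can be run twice without failing.

```shell
# Hypothetical helper: enable a site by linking it into sites-enabled.
# Usage: enable_site CONF_NAME [NGINX_DIR]   (NGINX_DIR defaults to /etc/nginx)
enable_site() {
    conf="$1"
    nginx_dir="${2:-/etc/nginx}"
    src="$nginx_dir/sites-available/$conf"
    dst="$nginx_dir/sites-enabled/$conf"

    # Refuse to create a dangling link if the source file is missing.
    if [ ! -f "$src" ]; then
        echo "no such config: $src" >&2
        return 1
    fi

    # -f replaces an existing link, so running this twice is harmless.
    ln -sf "$src" "$dst"
}
```

Against the real /etc/nginx directory this needs to run as root, for example from a script started with sudo, and it should still be followed by sudo nginx -t.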

If no errors are reported, all that remains now is restarting NGINX and the API should be working properly. Restart can be performed by typing

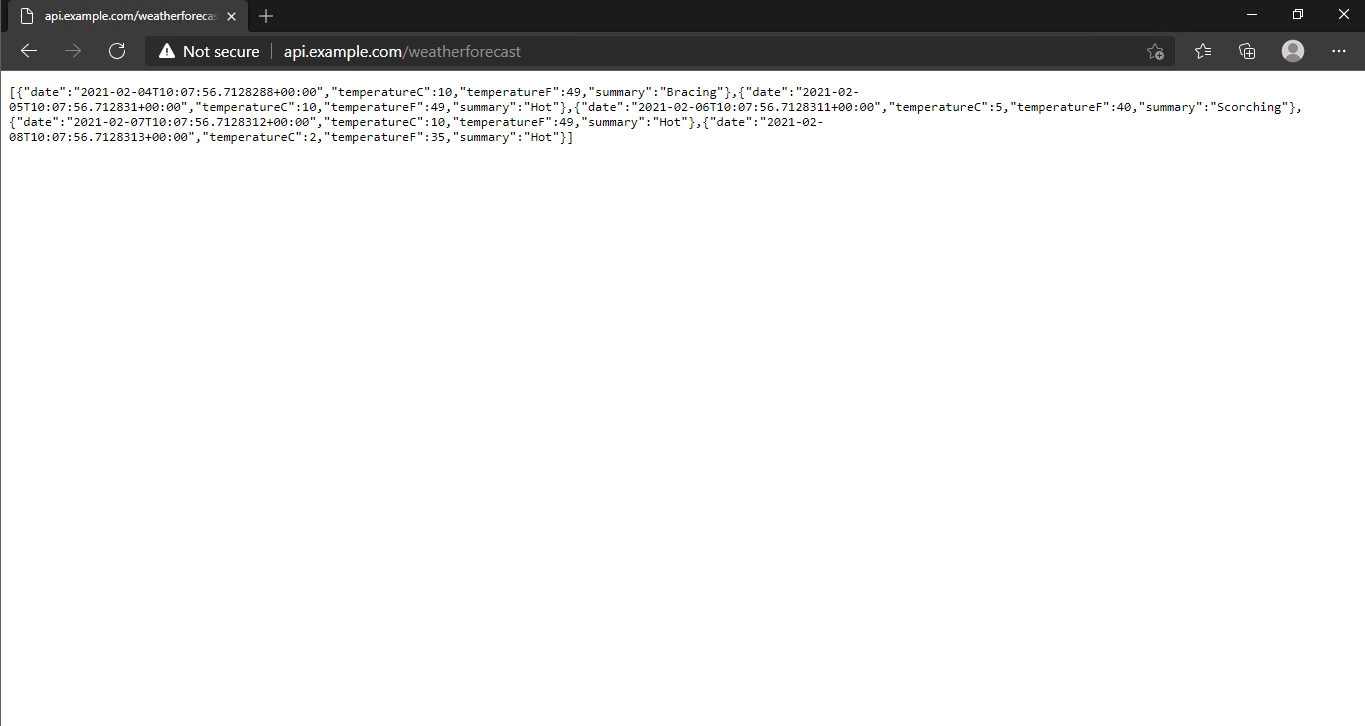

sudo service nginx restartinto the console. After all that you should be able to see the API running on your domain just like it would using Kestrel or IIS.
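The test and restart steps can also be chained so that NGINX is only touched when the configuration is valid. The sketch below makes two assumptions: safe_reload and the two overridable variables are hypothetical names, and it uses systemctl reload instead of the restart shown above, because a reload applies the new configuration without dropping existing connections.

```shell
# Hypothetical helper: validate the config and reload only if the test passes.
# Both commands can be overridden, which allows a dry run without NGINX.
NGINX_TEST_CMD="${NGINX_TEST_CMD:-sudo nginx -t}"
NGINX_RELOAD_CMD="${NGINX_RELOAD_CMD:-sudo systemctl reload nginx}"

safe_reload() {
    # The variables are left unquoted on purpose so that
    # "sudo nginx -t" is split into a command and its arguments.
    if $NGINX_TEST_CMD; then
        $NGINX_RELOAD_CMD && echo "reloaded"
    else
        echo "config test failed; NGINX left untouched" >&2
        return 1
    fi
}
```

Calling safe_reload after editing a .conf file keeps the other sites on the server running even if the new file contains a mistake.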

This is the end of Part 1 of this tutorial. In the next part, we will tackle running the app as a daemon, so it does not have to be running in the terminal, as well as securing our server with a free SSL certificate from Let’s Encrypt. Stay tuned!
